Local LLMs - Ollama and VSCode

Introduction

I had some time to play around with running LLMs locally and hooking those models up to VSCode, so I can easily use them when coding: explaining code, creating unit tests and code completion.

This short blog post explains an easy way to get up and running fast using Ollama and the CodeGPT extension.

I am assuming you are running Ollama on a Linux host.

Ollama

Ollama is a tool that you can use to run open-source large language models, such as Llama 2, locally. Ollama bundles model weights, configuration, and data into a single package, defined by a Modelfile. It optimizes setup and configuration details, including GPU usage.

The easiest way to run it is to download the single binary and run it.

Download the Ollama binary to /usr/bin and make it executable (writing to /usr/bin requires root privileges).

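```shell
sudo curl -L https://ollama.ai/download/ollama-linux-amd64 -o /usr/bin/ollama
sudo chmod +x /usr/bin/ollama
```

Run Ollama. Ollama will listen on localhost port 11434 by default. The server runs in the foreground, so leave it running and use a second terminal for the commands that follow.

```shell
ollama start
```

Ollama models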

Ollama needs to download a model before it is of any use. You can browse the models available for download here.

With the Ollama server running, download a model. As an example, I am pulling the Python variant of the WizardCoder model.

```shell
ollama pull wizardcoder:python
```

Other Ollama commands

List all Ollama models available on your server.

```shell
ollama list
```

```
NAME                     ID              SIZE      MODIFIED
codellama:7b-instruct    8fdf8f752f6e    3.8 GB    2 days ago
codellama:7b-python      120ca3419eae    3.8 GB    2 days ago
codellama:latest         8fdf8f752f6e    3.8 GB    2 days ago
mistral:instruct         1ab49bc0b6a8    4.1 GB    2 days ago
mistral:latest           1ab49bc0b6a8    4.1 GB    2 days ago
wizardcoder:7b-python    de9d848c1323    3.8 GB    2 days ago
wizardcoder:latest       de9d848c1323    3.8 GB    2 days ago
wizardcoder:python       de9d848c1323    3.8 GB    2 days ago
```

Play around with some prompts from the CLI

```shell
ollama run wizardcoder:python
```

```
>>> Can you help me with some Python code?
Sure, what kind of Python code would you like me to help you with?
```

VSCode and CodeGPT

Now that we have Ollama up and running, let's install the VSCode extension called CodeGPT and connect it to our model. If Ollama is running on a remote server, you first need to make it available locally.

SSH and make Ollama available locally

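As CodeGPT cannot be configured to talk to a remote Ollama server, we need to make the Ollama server available locally. SSH port forwarding does the job: the command below forwards port 11434 on your machine to port 11434 on the server's localhost for as long as the SSH session stays open.

```shell
ssh -L 11434:localhost:11434 user@your_server
```

CodeGPT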

CodeGPT is an extension for VSCode that can connect to different LLMs. We will use it to connect to our local LLM.
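Before setting up the extension, it can be worth checking that the model actually answers on localhost:11434, whether Ollama runs on this machine or sits behind the SSH tunnel. Below is a small standard-library Python sketch against Ollama's REST API: `GET /api/tags` lists the pulled models, and `POST /api/generate` with `"stream": false` returns a single JSON object whose `response` field holds the answer. The helper names (`model_names`, `list_models`, `generate`) are my own, and the model name assumes the `wizardcoder:python` pull from earlier.

```python
"""Quick check that the Ollama HTTP API answers on localhost:11434 (a sketch)."""
import json
import urllib.request

# Default Ollama port; with the ssh -L tunnel it is forwarded from the server.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags: dict) -> list:
    """Pull the model names out of a GET /api/tags response body."""
    return [model["name"] for model in tags.get("models", [])]


def list_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list:
    """Ask the server which models it has pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return model_names(json.load(response))


def generate(prompt: str, model: str = "wizardcoder:python",
             base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send one prompt to POST /api/generate and return the full answer.

    "stream": False asks for a single JSON object instead of a stream of chunks.
    """
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["response"]


def main() -> None:
    try:
        names = list_models()
        print("Models on the server:", names)
        # Only prompt the model if the assumed wizardcoder:python pull is present.
        if "wizardcoder:python" in names:
            print(generate("Can you help me with some Python code?"))
    except (OSError, ValueError) as err:  # URLError subclasses OSError
        print(f"Could not talk to Ollama at {OLLAMA_URL}: {err}")


if __name__ == "__main__":
    main()
```

If the script prints the model list, the server is reachable at the address the extension will use; if it reports a connection error, check that `ollama start` (or the SSH session) is still running.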

- Install the CodeGPT extension in VSCode
  - View -> Extensions, search for ‘CodeGPT’ and click Install
- Configure the CodeGPT extension
  - Click the configuration icon in the Extension view and choose Extension Settings
  - _Code GPT: Api Key_: Ollama
  - _Code GPT: Model_: wizardcoder:python

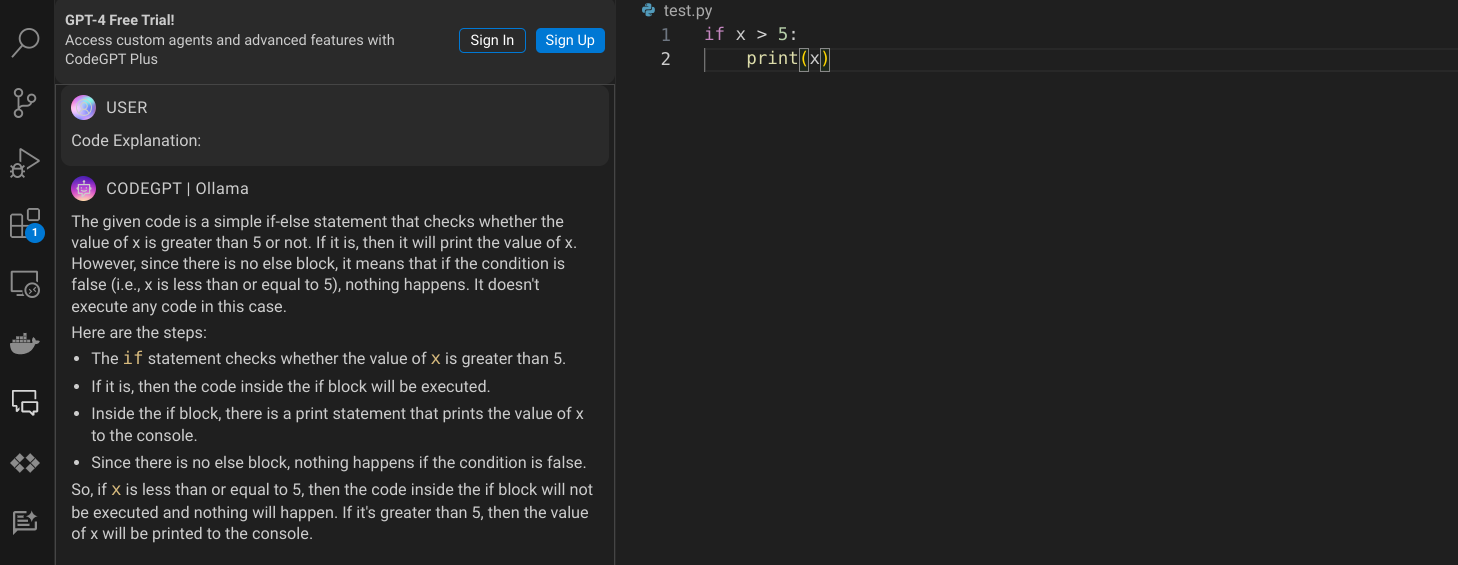

Now you can try it out on some Python code. Open any Python file, select some code, right-click and choose Code GPT: Code Explanation.
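To get a feel for what a code explanation request to the local model involves outside the editor, here is a rough command-line stand-in. It is a sketch, not how CodeGPT is implemented: the prompt wording in the hypothetical `build_explain_prompt` helper is my own assumption, and the script again assumes Ollama (or the SSH tunnel) on localhost:11434 with the `wizardcoder:python` model pulled.

```python
"""Rough command-line stand-in for "Code GPT: Code Explanation" (not CodeGPT's code)."""
import json
import sys
import urllib.request

# Assumption: Ollama (or the SSH tunnel to it) listens on the default port.
GENERATE_URL = "http://localhost:11434/api/generate"

SAMPLE = "def add(a, b):\n    return a + b\n"


def build_explain_prompt(code: str) -> str:
    """Wrap a code selection in an explanation request.

    The wording is a hypothetical example, not the prompt CodeGPT actually sends.
    """
    return (
        "Explain what the following Python code does, step by step.\n\n"
        + code.rstrip()
        + "\n"
    )


def explain(code: str, model: str = "wizardcoder:python", timeout: float = 120.0) -> str:
    """Send the prompt to POST /api/generate (non-streaming) and return the answer."""
    body = json.dumps(
        {"model": model, "prompt": build_explain_prompt(code), "stream": False}
    ).encode("utf-8")
    # Passing data makes urllib send a POST request.
    request = urllib.request.Request(
        GENERATE_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["response"]


if __name__ == "__main__":
    # Usage: python3 explain.py path/to/file.py (falls back to a tiny sample)
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as source:
            code = source.read()
    else:
        code = SAMPLE
    try:
        print(explain(code))
    except (OSError, ValueError, KeyError) as err:
        print(f"Request to {GENERATE_URL} failed: {err}")
```

For example, `python3 explain.py my_script.py` prints the model's explanation of `my_script.py`; without an argument it explains the two-line sample function.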